Bigger Smarter Data

Extracting, Modeling and Linking Data for Literary History

Christof Schöch

(Trier University, Germany)

Korea University

Seoul, South Korea

23 May 2024

Introduction

Thanks

Seung-eun Lee of Korea University / Department of Korean Language and Literature / Humanities Utmost Sharing System, and Byungjun Kim, KAIST, on behalf of KADH (Korean Association for Digital Humanities).

The Ministry for Research, Education and Culture of Rhineland-Palatinate, Germany, for funding this research (Mining and Modeling Text, 2019-2023).

Thanks to all the project contributors: Maria Hinzmann, Matthias Bremm, Tinghui Duan, Anne Klee, Johanna Konstanciak, Julia Röttgermann and many others.

Overview

Bigger Smarter Data:

Linked Open Data

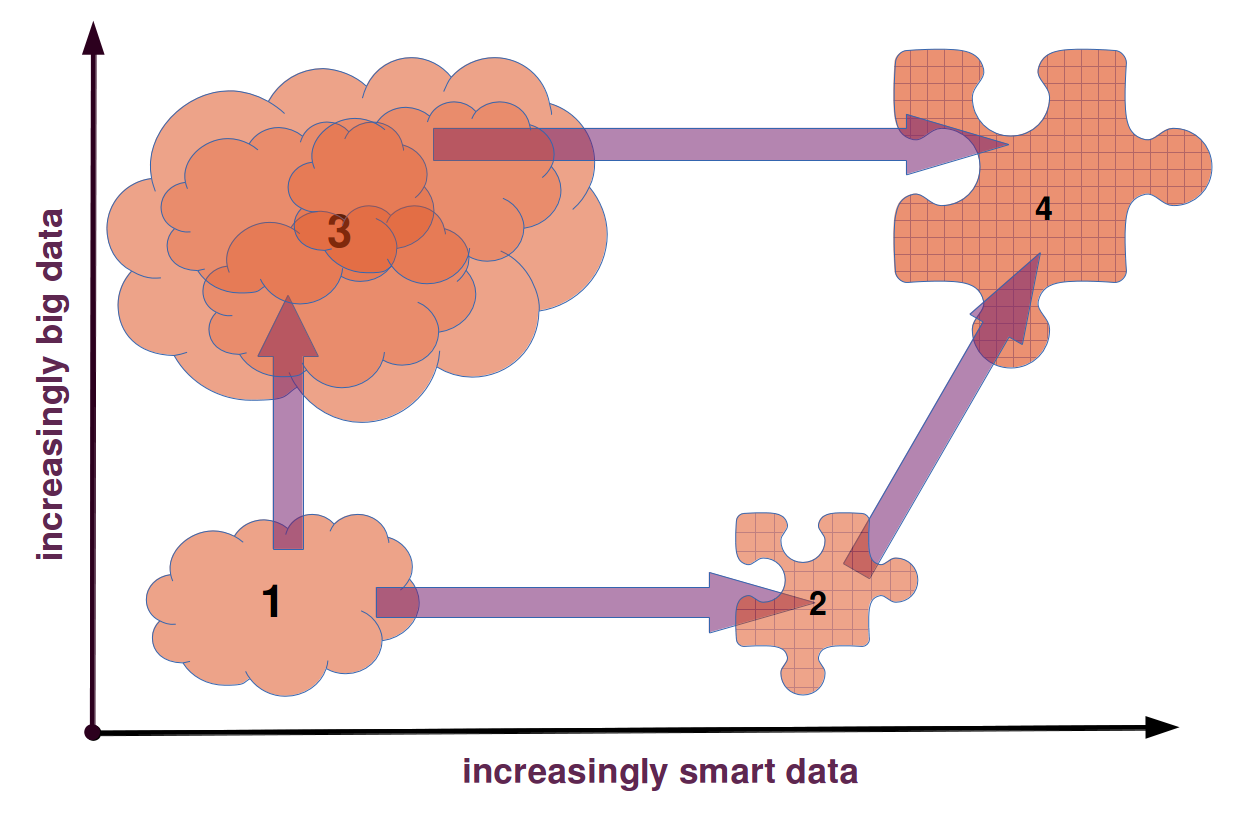

Three Modes of Data (and Digital Humanities)

- Qualitative DH:

- Datasets are typically small, curated, heavily annotated, flawless, specialized (‘smart data’)

- Prototype: digital scholarly editions, e.g. Goethe, Faust Edition

- Quantitative DH:

- Datasets are typically large, scraped, unannotated, with errors and biases, generic (‘big data’)

- Prototype: Large Language Models trained on lots of text, e.g. ChatGPT

Third Way: Bigger Smarter Data

Background: What is Machine Learning?

- Fundamentally, ML involves detecting relations between features and labels

- Features that we can observe in data

- Labels, classes, or values that are relevant to our research

- We use this approach primarily for information retrieval

- We start from a text collection

- We may annotate part of the data

- Then train a new model, or use an existing model

- Evaluate the performance of the model on the annotated data

- And then derive labels, classes, values from the unannotated text
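The workflow above can be sketched in a few lines of Python. This is only a toy illustration, not the method used in the project: the example sentences and the labels "love" and "travel" are invented, and the "model" is a plain word-overlap score rather than a real learning algorithm.

```python
from collections import Counter, defaultdict


def features(text):
    """Observable features: lower-cased word counts of a text."""
    return Counter(text.lower().split())


def train(annotated):
    """Sum the word counts of all annotated texts, separately per label."""
    model = defaultdict(Counter)
    for text, label in annotated:
        model[label].update(features(text))
    return dict(model)


def predict(model, text):
    """Pick the label whose training vocabulary overlaps most with the text."""
    feats = features(text)

    def score(label):
        return sum(count * model[label][word] for word, count in feats.items())

    # sorted() makes the tie-break deterministic (alphabetical order).
    return max(sorted(model), key=score)


def accuracy(model, annotated):
    """Share of annotated texts for which the predicted label is correct."""
    hits = sum(predict(model, text) == label for text, label in annotated)
    return hits / len(annotated)


# Invented toy data: the annotated part of the collection ...
train_set = [
    ("she wrote a tender letter to her lover", "love"),
    ("his heart burned with passion and jealousy", "love"),
    ("the ship sailed from the harbour to a distant island", "travel"),
    ("they crossed the mountains on the road to italy", "travel"),
]
# ... a held-out annotated part used only for evaluation ...
held_out = [
    ("a letter full of passion", "love"),
    ("the road to the harbour", "travel"),
]
# ... and the unannotated part we want labels for.
unannotated = [
    "her lover read a tender letter",
    "the ship reached the island",
]

model = train(train_set)
print("accuracy on held-out data:", accuracy(model, held_out))
for text in unannotated:
    print(predict(model, text), "<-", text)
```

Evaluating on held-out annotations rather than on the training texts is what makes the accuracy figure meaningful; in practice the toy scorer would be replaced by a trained or pre-existing model.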

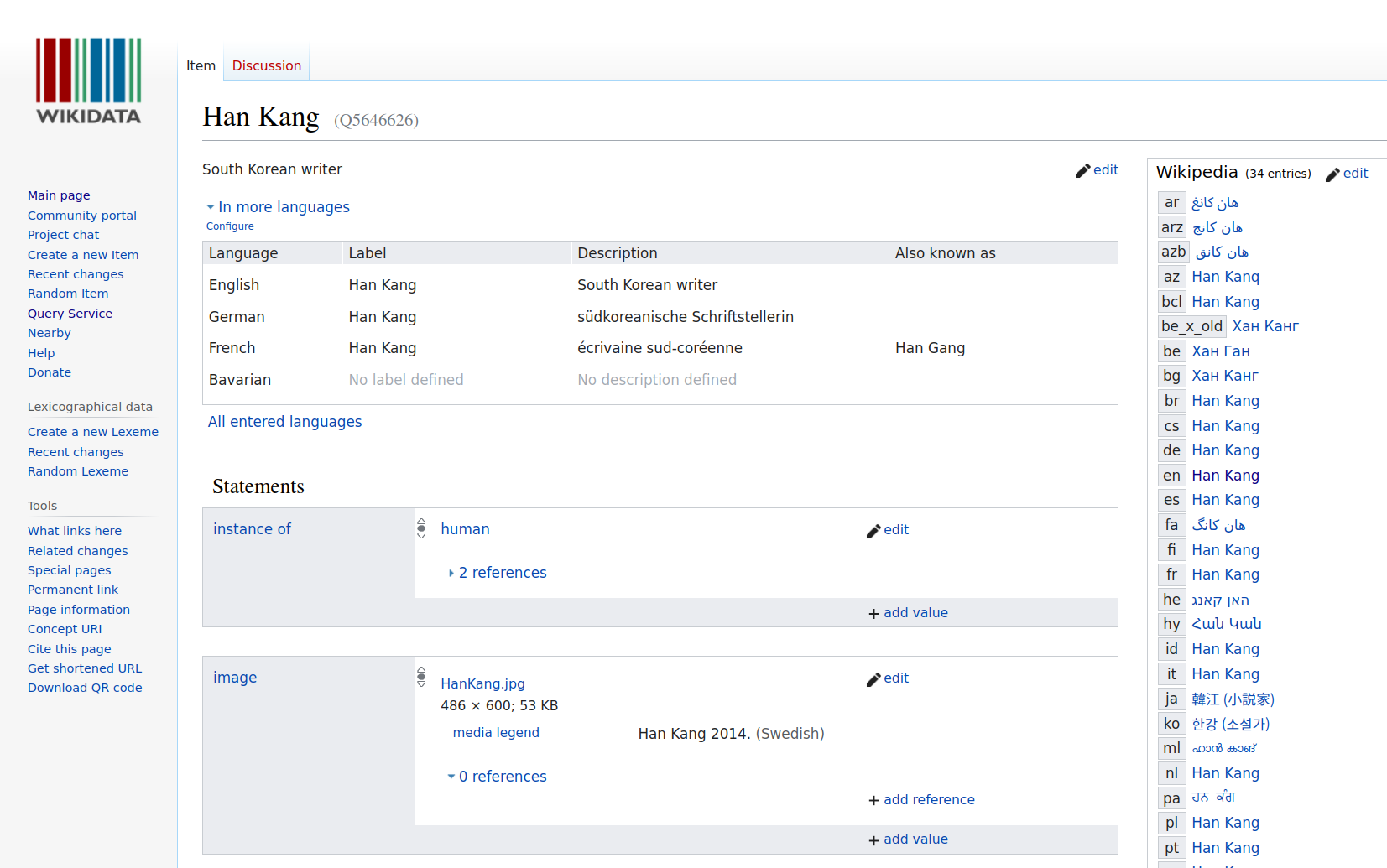

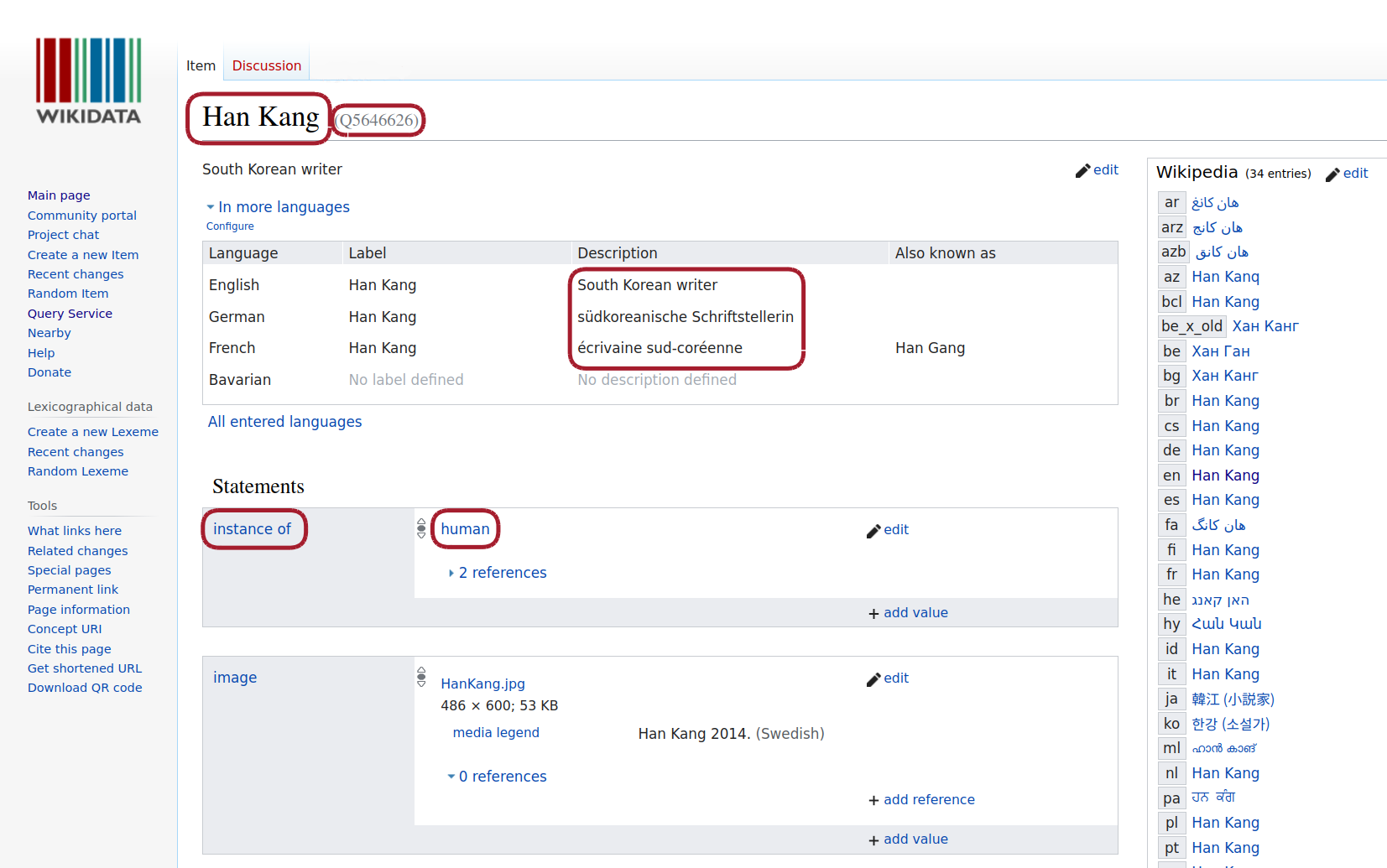

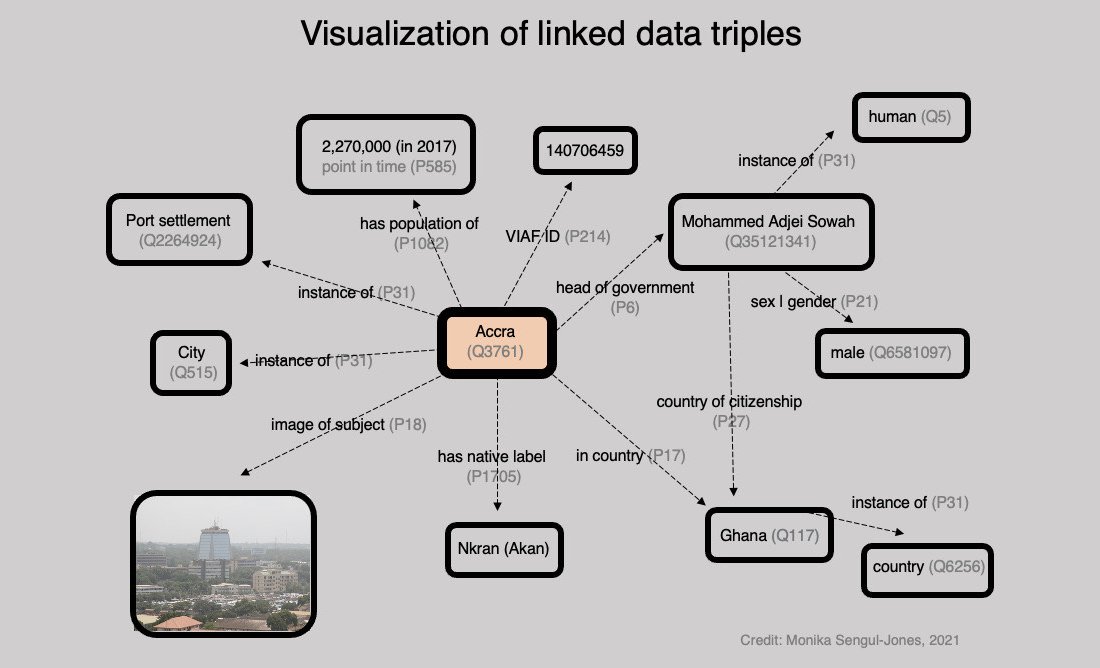

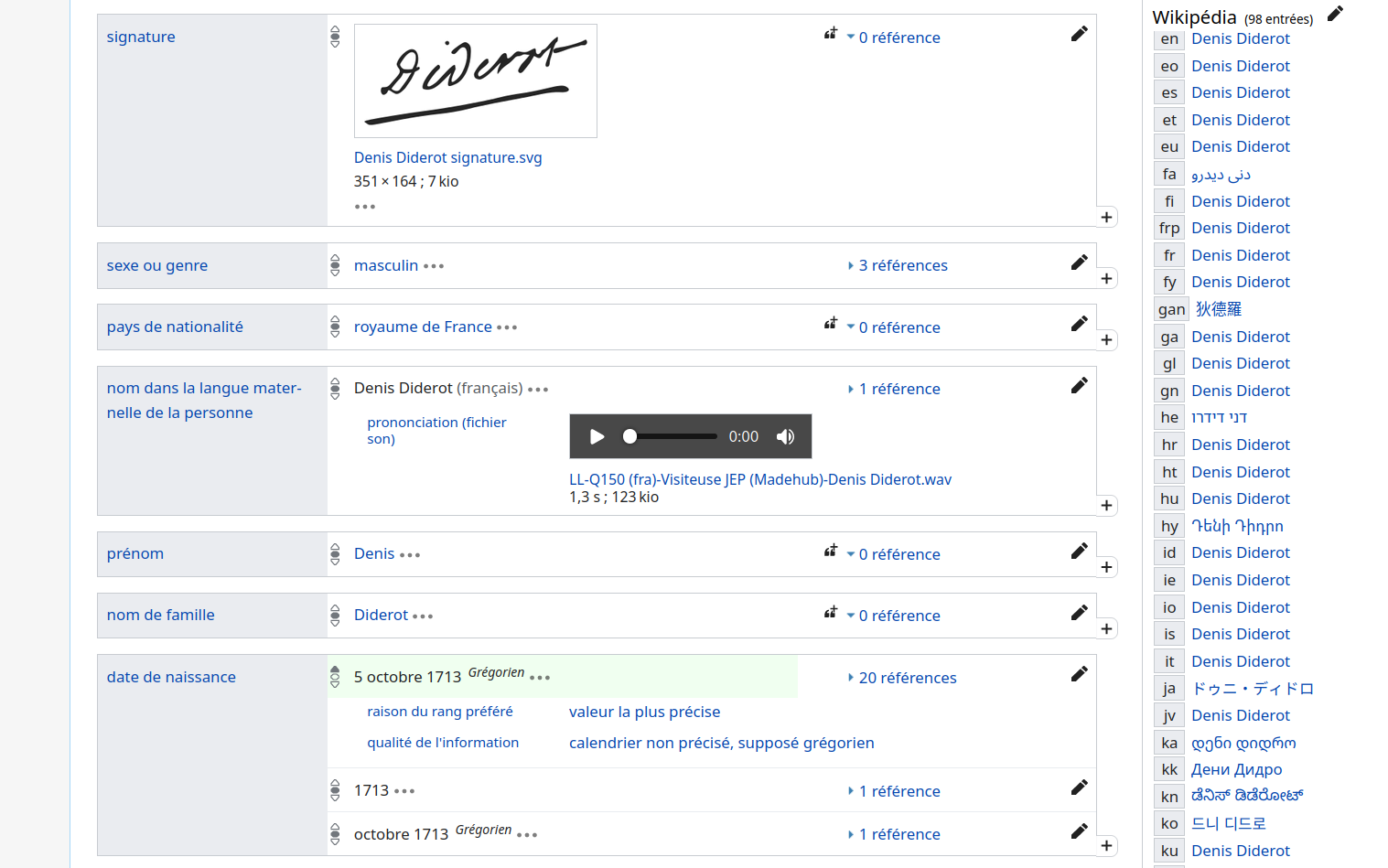

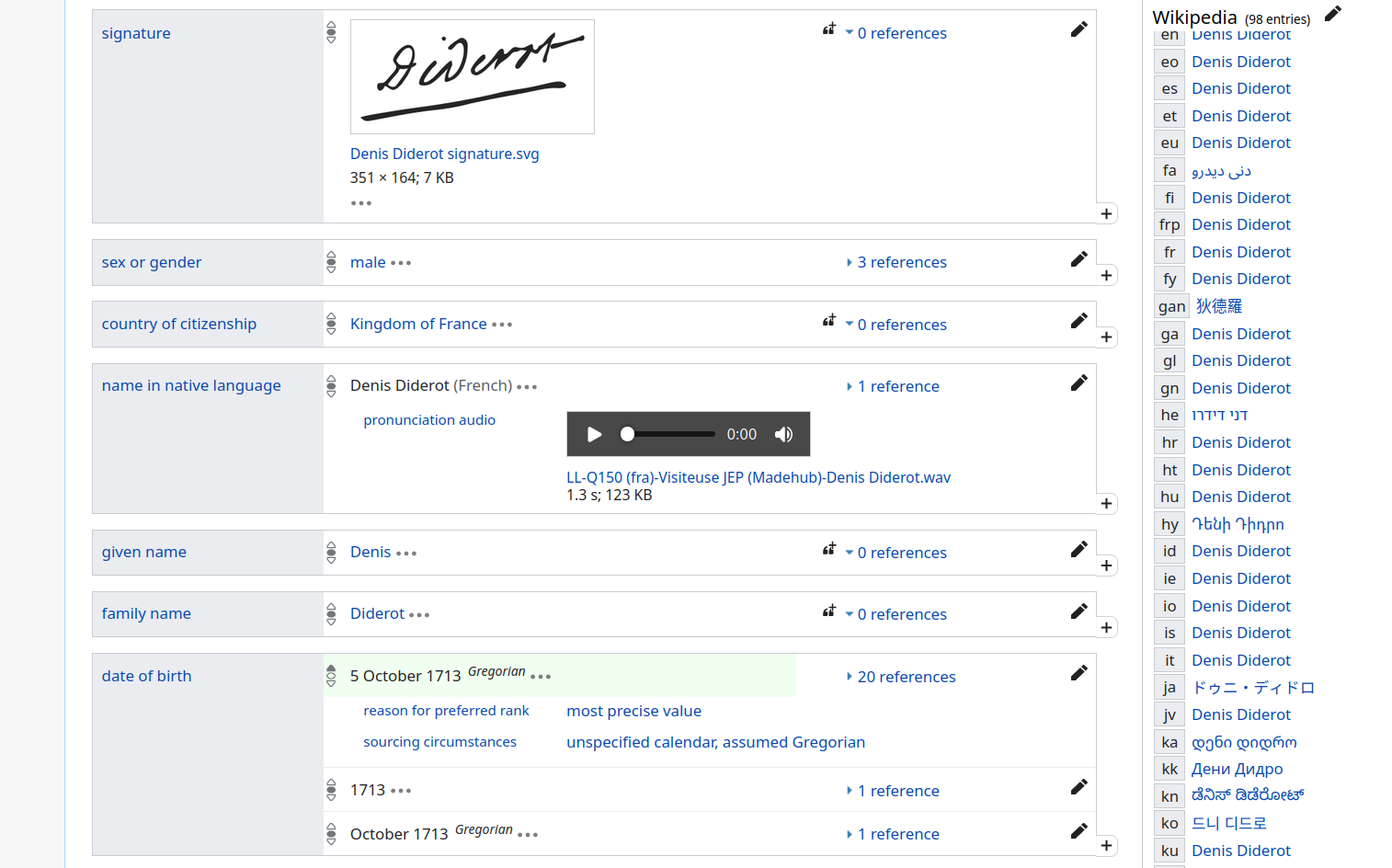

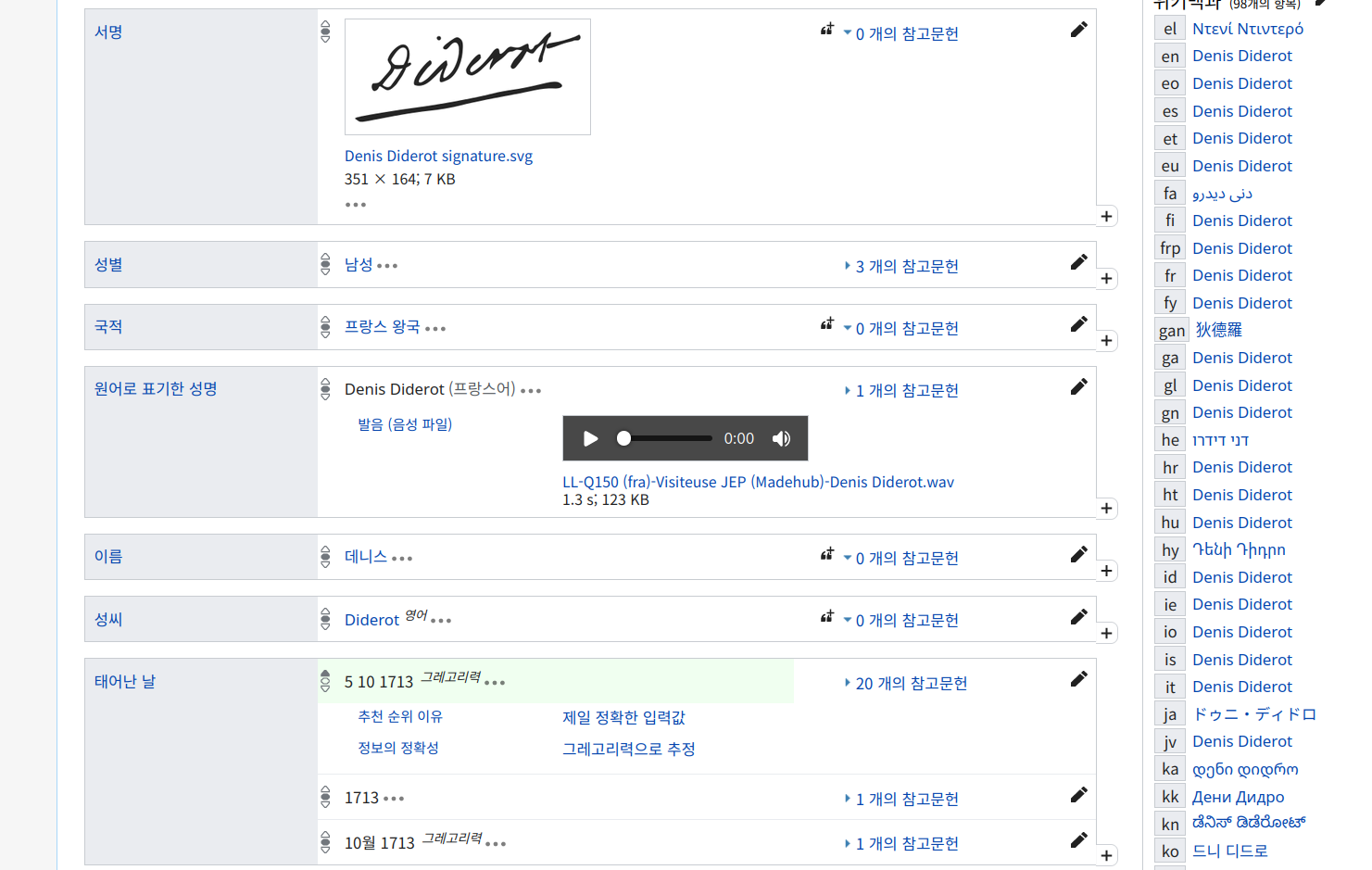

Background: What is Linked Open Data?

Linked Open Data: Multilingualism

Background: What is Literary History?

- Goals of literary history

- Collecting and documenting knowledge of literary history

- Providing explanations for the development of literature

- Organizational principles

- Nations, periods, movements/currents, genres

- Authors and works: themes, forms, relationships

- Similarities and differences, continuities and change

- Functions

- Describe and document literary history

- Explain literary development

Literary History in Linked Open Data

- Building blocks

- Subjects, including persons (author, etc.) and works (primary text, scholarly literature, etc.)

- Objects, including works, but also themes, locations, protagonists, literary genre, etc.

- Predicates, as required, including: author_of, about, sameAs etc.

- Qualifications, e.g.: Source (with type, date, URL)

- Some exemplary statement types

- Bibliographic: [person] author_of [work]

- Content-based: [work] about [theme]

- Formal: [work] narrative_form [type]

- and many more.
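As a minimal sketch of these building blocks, a statement can be represented as a subject-predicate-object triple with an optional source qualification. The class names, field names and source type strings below are assumptions made for illustration, and the example values are illustrative rather than taken from the MiMoTextBase.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Source:
    """Qualification of a statement: where the claim comes from."""
    type: str                   # hypothetical values, e.g. "bibliography"
    date: Optional[str] = None
    url: Optional[str] = None


@dataclass(frozen=True)
class Statement:
    """One subject-predicate-object triple, optionally with its source."""
    subject: str
    predicate: str
    obj: str
    source: Optional[Source] = None

    def as_triple(self):
        return (self.subject, self.predicate, self.obj)


# Illustrative statements, one per statement type named above.
statements = [
    Statement("Voltaire", "author_of", "Candide", Source(type="bibliography")),
    Statement("Candide", "about", "travel", Source(type="topic model")),
    Statement("Candide", "narrative_form", "heterodiegetic",
              Source(type="scholarly literature")),
]


def by_predicate(items, predicate):
    """Select all statements that use a given predicate."""
    return [s for s in items if s.predicate == predicate]


for s in statements:
    print(s.as_triple(), "| source:", s.source.type)
```

Keeping the source on the statement itself, rather than in a separate table, is what later allows statements from different sources to be compared side by side.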

Wikidata for Literary History

- Idea: Create a “Wikidata for the history of literature”

- Literary history information system

- LOD-based, with explorative interface and SPARQL endpoint

- Approach: an “atomization” of historical knowledge

- Linking with other knowledge systems (taxonomies, authority data, knowledge bases)

- Key values: human and machine readable, open, collaborative, multilingual

- Compared to Wikidata

- Focused on one domain (French novel, 1750-1800)

- Better coverage / higher density of information for this domain

- Development of a systematic ontology

- Much smaller: 300k vs. 1.5 billion statements

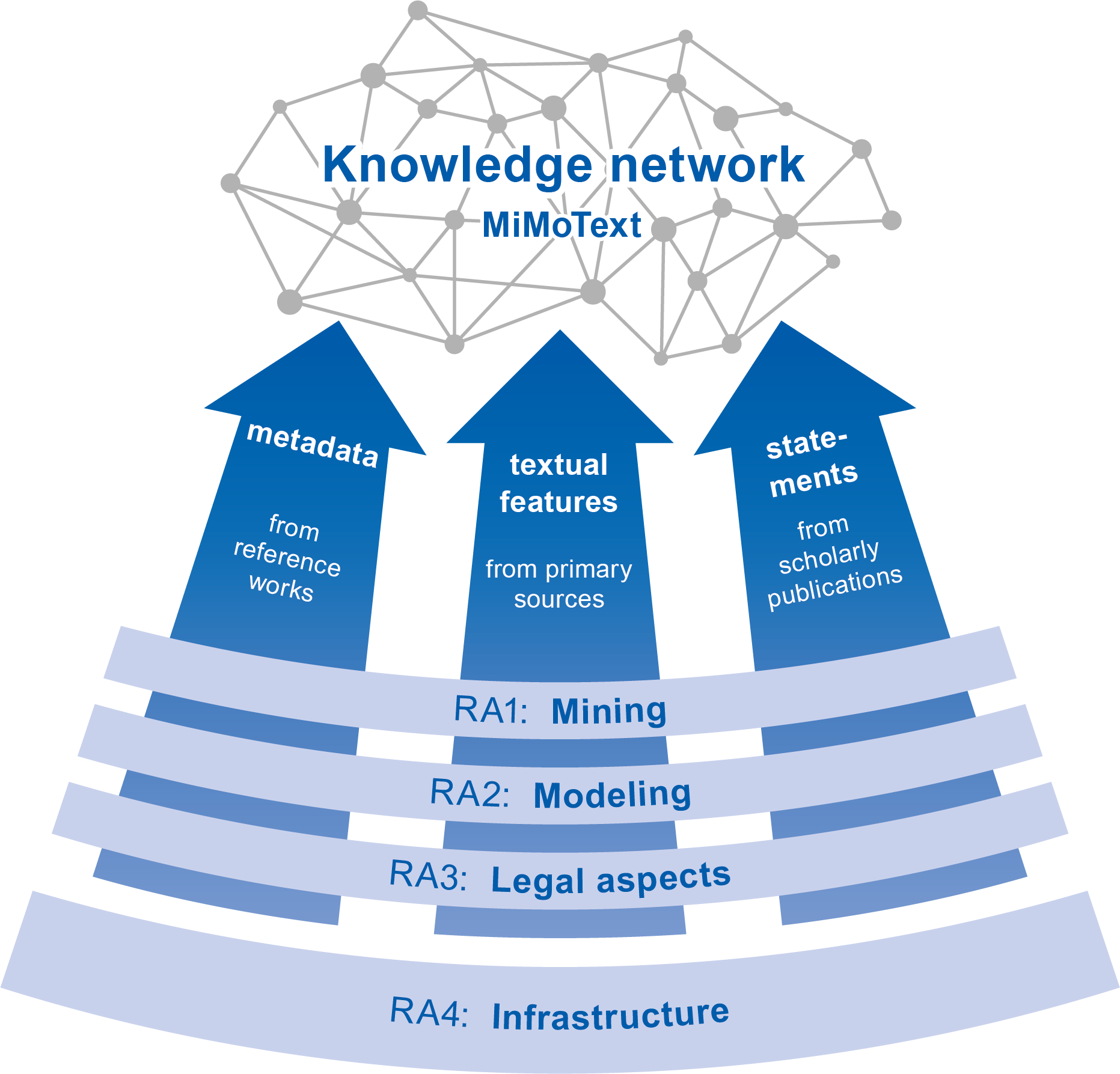

The project ‘Mining and Modeling Text’

Mining: Information Extraction

Pillar 1: Bibliographie du genre romanesque français

Pillar 2: primary literature (novels)

- Corpus of 200 French novels (1750-1800)

- Encoding: in XML-TEI, with metadata, according to ELTeC schema

- Methods of analysis: Topic modeling, NER, stylometry, etc.

Pillar 3: Scholarly Literature

- Annotation guidelines => Manual annotations (using INCEpTION)

- Linking of INCEpTION with MiMoTextBase and Wikidata => disambiguation

- Creation of statements about authors and works (genres, themes, etc.)

- Machine Learning based on the annotated training data

Modeling: Data Modeling

Modular Data Model

- Module 1: Theme

- Module 2: Space

- Module 3: Narrative form

- Module 4: Literary work

- Module 5: Author

- Module 6: Mapping

- Module 7: Referencing

- Module 8: Versioning & publication

- Module 9: Terminology

- Module 10: Bibliography

- Module 11: Scholarly literature

Example: The module on themes

Example: The module on narrative location

Meta-Statements

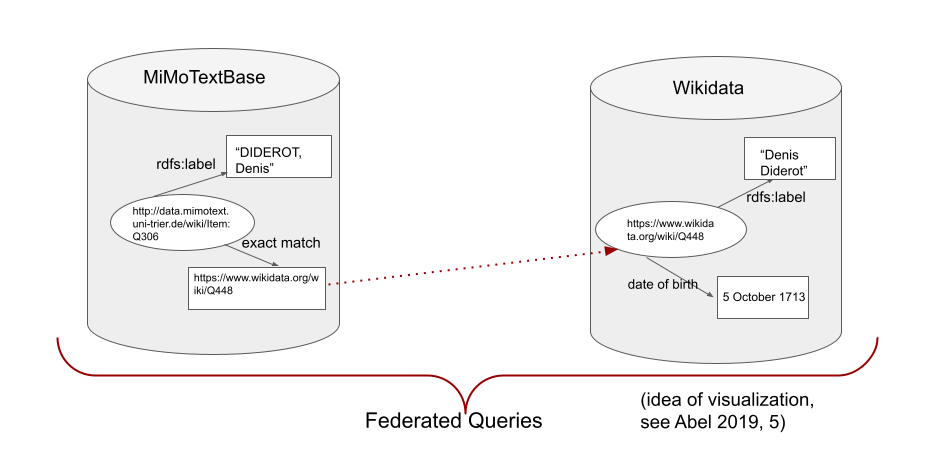

Linking with Wikidata for ‘federated queries’

Result: Queryable Database

The MiMoTextBase

SPARQL endpoint

MiMoTextBase: Query for themes in novels

Some sample queries: simple queries

Example queries: visualizations

Sample queries: networked and federated

- Link with catalogue data from French National Library (using BNF id)

- Narrative locations of novels (map)

- Authors by birth year (with portrait)

- Alternative author names from Wikidata infobox

- Network of influences between authors (using ‘influenced by’)

- Querying MiMoText from Wikidata (it works both ways)

- Novels and basic information, from Wikidata
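The idea behind such networked and federated queries can be illustrated without any network access. The sketch below assumes two small in-memory triple collections, a local one and a remote one, that are connected by a mapping statement; all identifiers (`local:A1`, `remote:X1`) and predicate names are hypothetical placeholders, not actual MiMoTextBase or Wikidata identifiers. In SPARQL 1.1 itself, the step into the remote store is expressed with the `SERVICE` keyword.

```python
def match(triples, s=None, p=None, o=None):
    """Return all triples matching a pattern; None acts as a wildcard."""
    return [
        t for t in triples
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    ]


# Local knowledge base: authors, works, and a link to the remote identifier.
LOCAL = [
    ("local:A1", "label", "Voltaire"),
    ("local:W1", "label", "Candide"),
    ("local:A1", "author_of", "local:W1"),
    ("local:A1", "exact_match", "remote:X1"),
]

# Remote knowledge base: holds information the local one does not have.
REMOTE = [
    ("remote:X1", "label", "Voltaire"),
    ("remote:X1", "birth_year", 1694),
]


def authors_with_birth_year(local, remote):
    """Join local authorship data with remote birth years via the mapping."""
    results = []
    for author, _, work in match(local, p="author_of"):
        for _, _, remote_id in match(local, s=author, p="exact_match"):
            for _, _, year in match(remote, s=remote_id, p="birth_year"):
                name = match(local, s=author, p="label")[0][2]
                title = match(local, s=work, p="label")[0][2]
                results.append((name, title, year))
    return results


print(authors_with_birth_year(LOCAL, REMOTE))
```

The join only works because the local store carries an explicit mapping to the remote identifier, which is why shared identifiers such as the BNF id matter for the queries listed above.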

Sample queries: comparative queries

Conclusion

Opportunities & challenges

- Opportunities

- Linking heterogeneous data from different types of sources

- Modeling, collecting and comparing contradictory statements

- Transparency in knowledge production (sources)

- Challenges

- Lack of consensus on relevant statement types in the discipline

- Complexity reduction (triple structure)

- Interoperability (tension ‘Wikiverse’ vs. OWL standard)
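Collecting and comparing contradictory statements becomes straightforward once every statement carries its source. The following sketch assumes statements as simple (subject, predicate, value, source) tuples; the novels, values and source names are invented placeholders. Only predicates declared as single-valued are checked, because a predicate like "about" can legitimately have several values.

```python
from collections import defaultdict


def find_conflicts(statements, single_valued):
    """Group values by (subject, predicate) and keep groups that disagree."""
    seen = defaultdict(lambda: defaultdict(list))
    for subject, predicate, value, source in statements:
        if predicate in single_valued:
            seen[(subject, predicate)][value].append(source)
    return {
        key: dict(by_value)
        for key, by_value in seen.items()
        if len(by_value) > 1
    }


# Invented example data: two sources disagree on one publication year.
STATEMENTS = [
    ("Novel X", "publication_year", 1761, "source A"),
    ("Novel X", "publication_year", 1762, "source B"),
    ("Novel X", "about", "love", "source A"),
    ("Novel X", "about", "travel", "source B"),
    ("Novel Y", "publication_year", 1770, "source A"),
    ("Novel Y", "publication_year", 1770, "source B"),
]

conflicts = find_conflicts(STATEMENTS, single_valued={"publication_year"})
for (subject, predicate), by_value in conflicts.items():
    print(subject, predicate, by_value)
```

Both conflicting values are kept together with their sources instead of one being silently discarded, in line with treating entries as statements rather than facts.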

Lessons Learned

- Federated queries

- Central element of the LOD vision

- => Making it happen is not trivial (data model, infrastructure)

- Modeling meta statements

- Very important: perspectives / statements, not facts

- => Very different approaches in different technical contexts


- Exchange across communities

- Literary Studies vs. Digital Humanities vs. Wikiverse

- => is essential but needs more development

- There is still so much to do!

- => We are continuing this effort in a new project called ‘Linked Open Data in the Humanities’ (LODinG)

Many thanks for your kind attention

Further resources

- Tutorial: https://docs.mimotext.uni-trier.de

- SPARQL endpoint: https://query.mimotext.uni-trier.de

- MiMoTextBase: https://data.mimotext.uni-trier.de

- MiMoText Ontology: https://github.com/MiMoText/ontology

- Reference publication: ‘Smart Modelling for Literary History’ (Schöch et al. 2022)

- Overview of visuals: mimotext.github.io/MiMoTextBase_Tutorial/visualizations.html

References

Martin, Angus, Vivienne G. Mylne, and Richard Frautschi. 1977. Bibliographie du genre romanesque français, 1751-1800. Mansell.

Röttgermann, Julia. 2024. “The Collection of Eighteenth-Century French Novels 1751-1800.” Journal of Open Humanities Data 10 (1): 31. https://doi.org/10.5334/johd.201.

Schöch, Christof. 2013. “Big? Smart? Clean? Messy? Data in the Digital Humanities.” Journal of Digital Humanities 2 (3): 1–19. https://journalofdigitalhumanities.org/2-3/big-smart-clean-messy-data-in-the-humanities/.

Schöch, Christof, Maria Hinzmann, Julia Röttgermann, Katharina Dietz, and Anne Klee. 2022. “Smart Modelling for Literary History.” International Journal of Humanities and Arts Computing 16 (1): 78–93. https://doi.org/10.3366/ijhac.2022.0278.
